Abstract

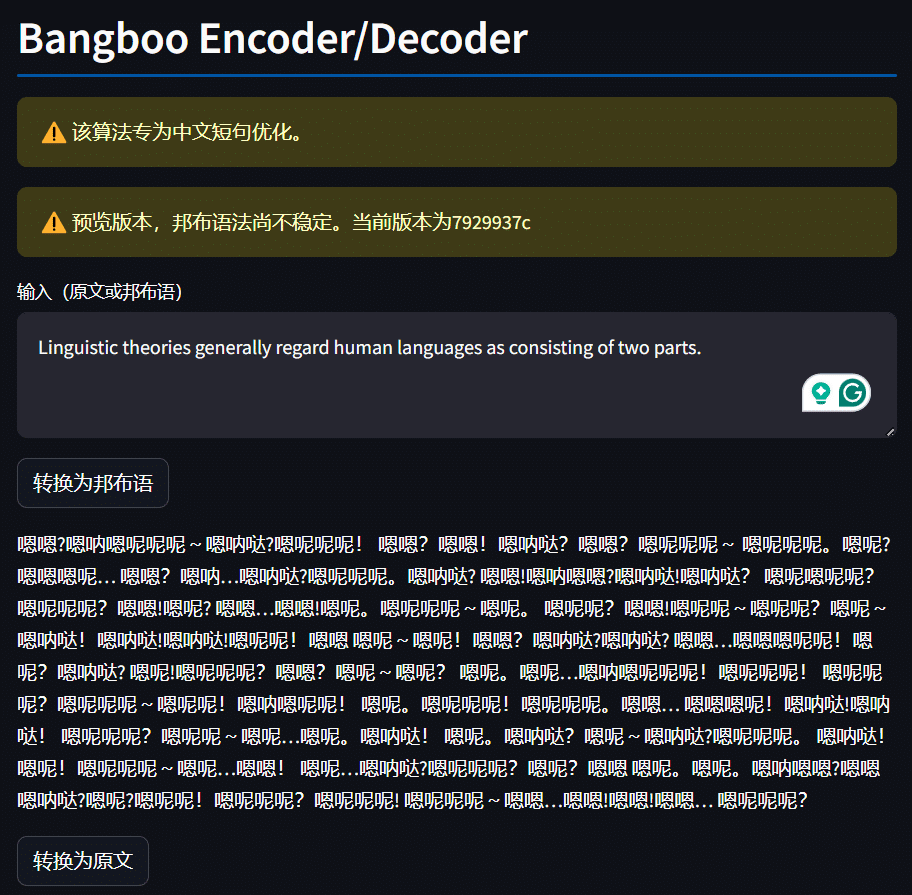

This article talks about an algorithm that encodes a plaintext message to a target lexicon. The lexicon can be constructed as a set of words from a hypothetical language to attain a fluent and natural result (The example here used Bangboo squeaks from Zenless Zone Zero; similar examples are cat meows, dog barks, etc.). The result can be seen as follows.

The lexicon can be replaced with any set of symbols. If you want to construct a cat language generator, use words such as ["Meow", "Miaow", "Meeeow"] as the lexicon. The output will be a sequence concatenated with these lexicons (and spaces)

Implementations

The Bangboo Encoder can be found at https://bangboo.sakana.moe

The source code can be found at https://gitlab.com/MetricVoid/bangboo

Goals

This algorithm targets encoding short sentences into a sequence with words from the lexicon. The goal is to make the encoding as short as possible.

Input Data

The algorithm depends on the following inputs.

$I$: The plaintext input sequence, seen as a sequence of bytes.

$L$: The lexicon of the target language, an array of symbols. $L[i]$ means the $i$-th symbol in the lexicon. The lexicon must follow certain rules, see Constructing The Lexicon.

$zdict$: The preset DEFLATE dictionary. A sequence of bytes that contains common byte sequences seen in $I$. Usually, if $I$ is a natural language, $zdict$ will be the common words and phrases in that language.

Algorithm Flow

The algorithms consist of the following steps.

Step 1. Compression

Firstly, the input data is compressed with a preset dictionary with the DEFLATE algorithm.

$$C = DEFLATE(I, zdict)$$

The reason DEFLATE was chosen is because we expect the input to be a short sentence with little repetition. Algorithms such as LZMA are more efficient when encoding large files with repetitions, but they use range encoders, which means they are inefficient when it comes to shorter texts with little repetition.

Compared to running zlib on the entire input with a dictionary generated and embedded into the output, the reason we chose to use a preset dictionary is because, again, the input is expected to be a short sentence with little repetitive information. In ideal cases, with a large dictionary of common words baked into the tool, the compression step will consist mostly of a sequence of pointers to the dictionary, which is more efficient.

Step 2. Base-N Encoding

After the first step, we get a sequence of bytes representing the input. This sequence of bytes is then converted to symbols in the lexicon.

There are two parameters we can tune here: element group size(M) and lexicon size(N). The basic idea is to treat the byte sequence as a huge number, convert it into base-N format, and correspond each digit to a word in the lexicon. However, the output is usually massive if treated as a single number, preventing efficient modulo and division operations. Therefore, the output sequence $C$ is divided into numerous smaller numbers, each consisting of M bytes. If $C$ is not a multiple of M bytes, zeros will be added as padding. Since N is usually not an integer power of 2, a larger group size M could help efficiency by utilizing more of the fractional bits, but it would also lead to longer padding if $len(C)$ is not a multiple of M.

Say the compressed byte sequence consists of numerous M-byte sequences, which can be represented as

$$C = \overline{C_1C_2C_3...}$$

Then, the Base-N encoding of C can be represented as a vector E

$$E = \langle C_{1_{[N]}}, C_{2_{[N]}}, C_{3_{[N]}}... \rangle$$

By converting each M-byte chunk into Base-N representation.

Step 3. Correspond encoding to the lexicon

The final step is simply a lookup of all the digits in the abovementioned Base-N number and corresponding them to items in the lexicon.

Write vector E as follows

$$ E = \langle E_1, E_2, E_3, ... \rangle $$

For each element in $E$, represent it in digits

$$ E_1 = \overline{E_{1_1}E_{1_2}E_{1_3}...} $$

The Lexicon Encoding for $E_1$ is therefore

$$ L_1 = \overline{L[E_{1_1}]L[E_{1_2}]L[E_{1_2}]...}$$

The final result $R$ is every element in $E$ converted into lexicon encoding.

$$R = L_1 + SPACE + L_2 + SPACE + L_3 + ...$$

$SPACE$ can be the space character here. Or it can be a special word reserved from the lexicon for purely separation purposes.

Constructing The Lexicon

Rules

The lexicon $L$ must follow specific rules.

- There must be no repeated words or symbols.

- No symbol should contain $SPACE$, unless it is specifically reserved for that purpose.

- No symbol should be a concatenation of other symbols.

- [A, B, C] is a valid lexicon.

- [A, B, ABC] is also a valid lexicon.

- [A, B, C, ABC] is invalid since ABC=A+B+C. The sequence 'ABC' in the encoded result is ambiguous since it can either be decoded as A+B+C or ABC.

- It is also worth noting that during decoding, subwords should be matched with the encoded sequence from the longest to the shortest. For example, If A+B is consumed from the sequence 'ABC,' the leftover sequence 'C' cannot be matched with anything in the lexicon, generating errors.

Tricks

Some tricks can be used to increase the size of the lexicon (thus attaining a shorter encoded output sequence) without affecting output length.

- Adding invisible (but copiable) characters such as zero-width spaces.

- Repeat sequences with similar characters, such as '!' and '!‘

Comments NOTHING